Minor macro wizardry: Lisp metaprogramming and why you should care

Motivation

Metaprogramming is one distinguishing feature of "advanced" programming languages. Rails is famous for its clever uses of metaprogramming techniques, and Template Haskell gives support for compile-time evaluation.

However, these techniques are not new. A long time ago, in a company far, far away, a very clever programmer (and now CEO of a seed accelerator named after a certain fixed-point combinator) wrote the book on how these techniques can be used to improve our code.

He wrote the book On Lisp.

Pun totally intended!

Why Lisp?

Why choose a dated and perhaps dead (sorry, Clojure fans) language to describe these techniques?

The answer is in a Latin-sounding word that's thrown around in programming language forums a lot: homoiconicity.

Refresher on your Compilers course

Grossly speaking, the front-end of a compiler does the following:

Lexical analysis

Collects input characters and turns them into "tokens" (grossly corresponding to a "word" in the language). This is what a tool such as Flex does.

In Ruby, you can use Ripper (built-in) to check how a lexed program looks like:

require 'ripper' require 'pp' pp Ripper.lex(<<-EOF 5.times do |n| puts n * n end EOF ) # => [[[1, 0], :on_int, "5"], # [[1, 1], :on_period, "."], # [[1, 2], :on_ident, "times"], # [[1, 7], :on_sp, " "], # [[1, 8], :on_kw, "do"], # [[1, 10], :on_sp, " "], # [[1, 11], :on_op, "|"], # [[1, 12], :on_ident, "n"], # [[1, 13], :on_op, "|"], # [[1, 14], :on_ignored_nl, "\n"], # [[2, 0], :on_ident, "puts"], # [[2, 4], :on_sp, " "], # [[2, 5], :on_ident, "n"], # [[2, 6], :on_sp, " "], # [[2, 7], :on_op, "*"], # [[2, 8], :on_sp, " "], # [[2, 9], :on_ident, "n"], # [[2, 10], :on_nl, "\n"], # [[3, 0], :on_kw, "end"], # [[3, 3], :on_nl, "\n"]]

Syntactic analysis

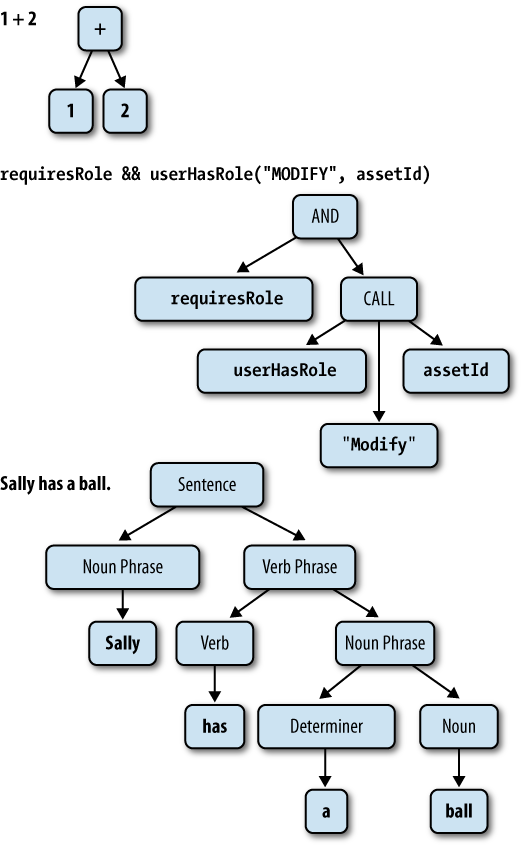

Uses the information from the step above to build a hierarchical structure - usually a parse tree or, as more usually known, an abstract syntax tree (AST for short).

Easier to understand this structure with a picture:

Again, there are examples in Ruby, using the same module. Using the same code as above, we can see something like this:

require 'ripper' require 'pp' pp Ripper.sexp(<<-EOF 5.times do |n| puts n * n end EOF ) # [:program, # [[:method_add_block, # [:call, [:@int, "5", [1, 0]], :".", [:@ident, "times", [1, 2]]], # [:do_block, # [:block_var, # [:params, [[:@ident, "n", [1, 12]]], nil, nil, nil, nil, nil, nil], # false], # [[:command, # [:@ident, "puts", [2, 2]], # [:args_add_block, # [[:binary, # [:var_ref, [:@ident, "n", [2, 7]]], # :*, # [:var_ref, [:@ident, "n", [2, 11]]]]], # false]]]]]]]

This structure is what gets actually run by Ruby - it's converted to YARV bytecode, optimized a bit, then executed (details are left to the reader).

Notice what we just did in this example: we transformed a stream of characters into a data structure that can be manipulated INSIDE the language. This structure is named a symbolic expression, s-expression, or, if you really want to be terse, sexp.

Only problem is, I don't really want to do that. I don't know how Ruby maps to this "weird" notation, and even if I did, working with all these arrays seems tedious…

Homoiconicity, for real

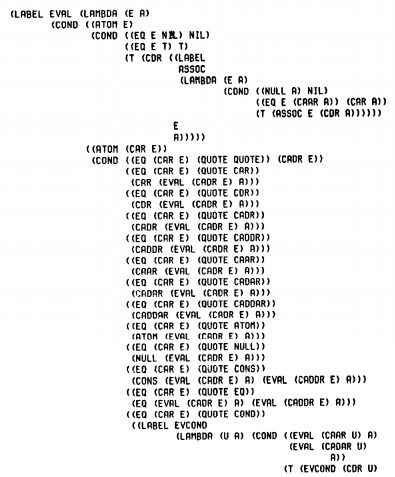

Imagine if there was an interpreter for sexps. One that took s-expressions as input, and executed them as any other programming language. Also imagine that this interpreter was the simplest one that you've ever seen: one that fit in one page of code. Don't believe such a thing could exist?

This is the definition of eval (taken from this article) for the original Lisp interpreter, written by Steve Russell (who also coded one of the first videogames in the world, Spacewar!):

So, paraphrasing the well-known Haskell joke about monads:

Lisp is just an evaluator for abstract syntax trees that implement the lambda calculus!

Now we have a very interesting language in our hands: one that can manipulate itself, like Ruby (and any language with a parser), but whose syntax maps (almost!) one to one to the same data structure used to represent it. This means that manipulating a Lisp program with another Lisp program is almost trivial.

Macro time!

So, why so much buzz about Lisp macros?

Programs that manipulate other programs are kind of a big deal. On the theoretical side, we can implement whole compilers by successful phases of transformations on ASTs1 (and it seems that someone already did).

What macros can (and usually) do

On the practical side, these are the main reasons we'd want to do this:

Custom syntax

This means trivial things such as implementing anaphoric variants of common control structures, to creating new operators that change the evaluation order of their arguments.

Compile-time code analysis and optimization

My favorite real-life example of such techniques is CL-PPCRE, the regex library.

Implementing completely new languages on top of your host language

DSLs are as easy as they are in, say, Ruby, and with the added benefit of possibly being optimized during compile-time. If you take it too far, you might end with a completely different language such as Lux or Shen…

Quasiquotation, or "how the f#$! do I write macros conveniently?"

In the Ruby examples above, we discussed how inconvenient it would be if we had to traverse and rewrite all those trees in our metaprogram. Here's an example of what it would look like in Scheme (shamelessly taken from this paper2):

(define array-size 1337) (define array-name "array-name") (define init-val "init-val") (list 'do '(i 0 (+ 1 i)) (list (list '>= 'i array-size)) (list 'vector-set! array-name 'i init-val)) ;; (do (i 0 (+ 1 i)) ((>= i 1337)) (vector-set! "array-name" i "init-val"))

This is tedious, and prone to error for bigger structures ("is it two or three calls to 'list' that I need?"). Fortunately, there's quasiquotation, that, despite the weird name, is just a "template language" for sexps.

Using quasiquotes, the code above can be written thus:

(define array-size 1337) (define array-name "array-name") (define init-val "init-val") `(do (i 0 (+ 1 i)) ((>= i ,array-size)) (vector-set! ,array-name i ,init-val)) ;; (do (i 0 (+ 1 i)) ((>= i 1337)) (vector-set! "array-name" i "init-val")) ;; exactly the same as above!

Tips for writing macros

These are mostly taken from this awesome presentation by David Nolen, a famous programmer in the Clojure community.

- Write a function that generates the expansion you want

- Write unit tests for that function

- Wrap it in a defmacro for syntactic goodness

Check the expansion using macroexpand facilities (Emacs helps a lot here)

Real-life example: this small macro I wrote to complement a Spacemacs macro:

(defmacro dcl/add-env-toggle (toggle-var toggle-key &optional toggle-on-expression) (let ((toggle-var-interned (intern (s-replace "_" "-" (downcase toggle-var)))) (toggle-on (or toggle-on-expression "true"))) `(spacemacs|add-toggle ,toggle-var-interned :status (getenv ,toggle-var) :on (setenv ,toggle-var ,toggle-on) :off (setenv ,toggle-var nil) :evil-leader ,(concat "ot" toggle-key) ,@(if toggle-on-expression (list :on-message `(format "%s's value is now %s" ,toggle-var (getenv ,toggle-var)))) ))) (macroexpand-1 '(dcl/add-env-toggle "RUBY_PROF_MEASURE_MODE" "rm" (completing-read "Measure mode (default: wall): " '(wall process cpu allocations memory gc_time gc_runs)))) ;; (spacemacs|add-toggle ruby-prof-measure-mode :status ;; (getenv "RUBY_PROF_MEASURE_MODE") ;; :on ;; (setenv "RUBY_PROF_MEASURE_MODE" ;; (completing-read "Measure mode (default: wall): " ;; '(wall process cpu allocations memory gc_time gc_runs))) ;; :off ;; (setenv "RUBY_PROF_MEASURE_MODE" nil) ;; :evil-leader "otrm" :on-message ;; (format "%s's value is now %s" "RUBY_PROF_MEASURE_MODE" ;; (getenv "RUBY_PROF_MEASURE_MODE")))

Example: Anaphoric macros

Groovy language, a Ruby-like language for the JVM, has an interesting feature: whenever a method that accepts a block, such as map, is given no explicit arguments, it automatically creates a variable "it" scoped to the block value.

In other words:

[1, 2, 3].map { it + 1 } # => [2, 3, 4]

This is referred to as an anaphora - named after the linguistic device of the same name.

Lisp doesn't have those built-in, but it's straightforward to add them. For instance, here's an anaphoric version of if, courtesy of the Emacs helm package:

(defmacro helm-aif (test-form then-form &rest else-forms) "Anaphoric version of `if'. Like `if' but set the result of TEST-FORM in a temporary variable called `it'. THEN-FORM and ELSE-FORMS are then excuted just like in `if'." (declare (indent 2) (debug t)) `(let ((it ,test-form)) (if it ,then-form ,@else-forms)))

Notice that this macro is capturing scope, given that its expansion can "shadow" the value of a pre-existing "it" variable.

There are some techniques to use and avoid this, and I refer the reader to chapter 9 of "On Lisp" for a good discussion.

Again, I want to highlight how important this is. If we want a new feature in our language, we don't need to wait for the language designers to add it. Just create a macro and be done with it.

This is the highest level of abstraction known so far - the compiler is as accessible to the standard programmer as any other library.

A digression

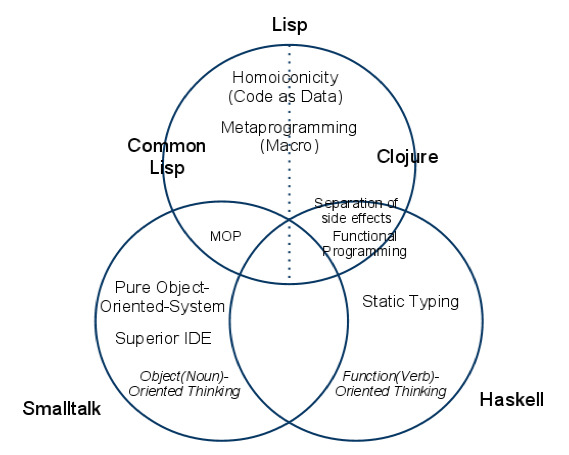

This diagram shows clearly the difference between "traditional" Lisp, and its modern offshoots. It's important to emphasize that Common Lisp is not functional - although it certainly can use this style.

And so the median language, meaning whatever language the median programmer uses, moves as slow as an iceberg. Garbage collection, introduced by Lisp in about 1960, is now widely considered to be a good thing. Runtime typing, ditto, is growing in popularity. Lexical closures, introduced by Lisp in the early 1970s, are now, just barely, on the radar screen. Macros, introduced by Lisp in the mid 1960s, are still terra incognita.

– Paul Graham, Beating the Averages

What will we see at the intersection of these three?

Interesting things macros have been used for

Early AI programs

Lisp used to have a reputation as an "AI language" (whatever that means). It's not hard to understand when you look at PAIP and its implementations of early AI programs:

- General Problem Solver (planning software)

- ELIZA (for Emacs users, M-x doctor)

- Macsyma (early computer algebra software - think MATLAB/Maple)

What all these have in common is their reliance on pattern-matching.

These programs are still worth studying for their historical significance, and its techniques are well-known in the area of automated planning.

Meta-object protocol (CLOS)

An interesting result of this malleability is that Lisp can be made object-oriented if need be. And this has been done…and written in Lisp, unsurprisingly.

The Common Lisp Object System, or CLOS for short, is the result of this. It has many advanced features, one of which is multi dispatch, also known as multimethods:

; declare the common argument structure prototype (defgeneric f (x y)) ; define an implementation for (f integer t), where t matches all types (defmethod f ((x integer) y) 1) (f 1 2.0) ; => 1 ; define an implementation for (f integer real) (defmethod f ((x integer) (y real)) 2) (f 1 2.0) ; => 2 ; dispatch changed at runtime

There's a somewhat obscure book detailing the internals of its extension language, called The Art of the Metaobject Protocol. I encourage you to grab a copy if you want to learn more about this, as it's a really interesting topic.

The venerable master Qc Na was walking with his student, Anton. Hoping to prompt the master into a discussion, Anton said "Master, I have heard that objects are a very good thing - is this true?" Qc Na looked pityingly at his student and replied, "Foolish pupil - objects are merely a poor man's closures."

Chastised, Anton took his leave from his master and returned to his cell, intent on studying closures. He carefully read the entire "Lambda: The Ultimate…" series of papers and its cousins, and implemented a small Scheme interpreter with a closure-based object system. He learned much, and looked forward to informing his master of his progress.

On his next walk with Qc Na, Anton attempted to impress his master by saying "Master, I have diligently studied the matter, and now understand that objects are truly a poor man's closures." Qc Na responded by hitting Anton with his stick, saying "When will you learn? Closures are a poor man's object." At that moment, Anton became enlightened.

If you want a real-life explanation of such techniques, look no further than Let over lambda, named after a simple explanation of this technique. The book has most chapters freely available online, but the dead tree version is worth it if only for its discussion on performance tweaks for Lisp macros.

FPGA code generation

There is a blog, later turned book, that features interviews with proeminent Lisp hackers. One of them is Marc Battyani, who founded 2 companies that write compilers from Lisp to VHDL, to power FPGA devices that are used in business applications such as financial algorithms, signal processing applications and many others.

For you to have an idea of how fast FPGA is:

For instance on 10Gb/s Ethernet market data packets coming from exchanges like the NASDAQ we process the IP/UDP/multicast network stack, extract the messages from the packets, parse/decode/filter/normalize those messages, maintain the indexed order book data structures, aggregate the price levels per stock, generate output messages and finally send them to a server through PCI-express or 10Gb/s Ethernet network stacks. The nice thing is that we do all that fully pipelined at a rate of one message every 12 nanoseconds! To have an idea of how fast it is, in 12 ns the light will only travel 3.6 meters (11.8 ft). Another way to view this performance is that the system can process 83 Millions of financial messages per second without any queuing.

The way they use their internal compiler is also impressive. From the interview:

The next step is very often to generate some or all the code in other languages by designing various domain specific languages (DSL) which will take care of the tedious aspects of programming in less powerful languages. I really like it when from a few 100s of lines written in a easy to use high level DSL we generate 10000 to 60000+ lines of very low level VHDL code saving months of development.

About that point I think I would even say that I'm mostly doing Language Oriented Programming by trying to abstract the domain specific knowledge into various domain specific languages with very different syntaxes like s-expressions, C like or other languages or even sometimes adding to the mix some GUI or data-based inputs. Then those DSLs would generate most if not all the application code in whatever language is needed be it VHDL, asm, C, javascript or Common Lisp.

Rewriting a test suite in 24h

In this blog post, a CircleCI dev shows how s/he used Clojure macros to "rewrite" their 5k LoC test suite from one test library to another. The interesting fact is that, instead of rewriting the source files to conform to the new API, the dev used macros to translate the old API to the new one automatically.

The blog post is worth reading on its own, but the main highlights are:

They used the built-in pattern-matching library, core.match, to implement the actual rewrite rules.

One example:

(match [actual arrow expected] [actual '=> 'truthy] `(is ~actual) [actual '=> expected] `(is (= ~expected ~actual)) [actual '=> (_ :guard regex?)] `(is (re-find ~contents ~actual)) [actual '=> nil] `(is (nil? ~actual)))

A few cases broke and required manual correction

This shows that macros are no silver bullet. They still got the 90% solution working in 24h, though. Not too shabby for a 5k LoC test suite.

Metaprogramming in other languages

C / C++

C (and its sucessor C++) is (in)famous for its text-level macros, that work by basic string replacement inside source code. While useful for simpler purposes, their usage gets confusing and "dangerous" very quickly. For example, using this well-known example by Mark Jason Dominus:

#define square(x) x*x /* this won't work: 2/square(10) = 2/10*10 = 2, but you wanted 0.02 */ #define square(x) (x*x) /* this won't work: square(1+1) = (1+1*1+1) = 3, but you wanted 4 */ #define square(x) ((x)*(x)) /* x =3D 2; square(x++) = ((x++)*(x++)) = 159.8, but you wanted 4 */

This happens because this is a source-level transformation, with no awareness at all of the syntactic structure of the C language. Given that the macro preprocessor doesn't have access to a parser, it doesn't know how to identify if the transformation it is performing is well-formed.

This gets a little better with C++ templates, which are proven to be Turing-equivalent, but nothing is said about their run-time complexity. It's possible to write a non-terminating template too… (but you can do that in Lisp, too ;))

C#

The C# language, which is thought by some to be M$'s response to Java, included a facility for writing MSIL (C# bytecode) directly in the language.

Later versions included a syntactic convenience - instead of directly writing bytecode, it's now possible to write something similar to s-exps through Expression Tree objects.

From this interesting infosec example from a C# consultancy:

What’s interesting about WarCraft 3’s revision check is that the challenge sent by the server is the hash function itself, as opposed to a prefix/suffix (Blizzard’s crypto is always “interesting”, sometimes with hilarious results). Each time a client connects, the server sends a description of a different hash function to apply. For example, you might receive the challenge “A=443747131 B=3328179921 C=1040998290 4 A=A^S B=B-C C=C^A A=A+B”, which describes how to initialize the hash function’s state (A,B,C) and the four operations to apply for each value of S read from the game files.

The code for compiling a particular expression tree for one of these hashes follows:

var example = new DynamicHasher {

InitialState = new[] { 0, 443747131, 332817992, 1040998290 },

Steps = new[] {

// A += input

new DynamicHasher.Step {

LeftInputIndex = 1,

RightInputIndex = 0,

Operation = DynamicHasher.Operation.Xor,

OutputIndex = 1

},

// B -= C

new DynamicHasher.Step {

LeftInputIndex = 2,

RightInputIndex = 3,

Operation = DynamicHasher.Operation.Subtract,

OutputIndex = 2

},

// C ^= A

new DynamicHasher.Step {

LeftInputIndex = 3,

RightInputIndex = 1,

Operation = DynamicHasher.Operation.Xor,

OutputIndex = 3

},

// A += B

new DynamicHasher.Step {

LeftInputIndex = 1,

RightInputIndex = 2,

Operation = DynamicHasher.Operation.Add,

OutputIndex = 1

}

}

};

Results are as follows:

Generating dynamically specialized code... Done after 45ms Timing interpretation vs dynamically generated code of example hash... Interpreted: 1622ms, Specialized: 1109ms Interpreted: 1536ms, Specialized: 1106ms Interpreted: 1532ms, Specialized: 1104ms Interpreted: 1538ms, Specialized: 1105ms Interpreted: 1540ms, Specialized: 1103ms Interpreted: 1531ms, Specialized: 1105ms Interpreted: 1542ms, Specialized: 1104ms Interpreted: 1534ms, Specialized: 1105ms Interpreted: 1547ms, Specialized: 1103ms Interpreted: 1545ms, Specialized: 1102ms (Note that the 45ms delay to generate the code is misleading. It includes a ton of static initialization and warmup costs. The next function to be compiled takes less than a millisecond to complete. However, since I cared about time-to-first-login at the time, I’m going to count the inflated value.)

Not bad for such a small piece of code, right?

Elixir

Elixir is a "sister" language to Ruby (features similar syntax, but very different semantics) that compiles down to Erlang bytecode (for the BEAM VM). The original Erlang implementation offers "support" for that in the form of the parse_transform function, but Elixir turns it up a few notches by offering a backquote-inspired syntax for macros.

A simple example:

defmodule Unless do defmacro macro_unless(clause, do: expression) do quote do if(!unquote(clause), do: unquote(expression)) end end end

This implements the unless construct that is also built-in in Ruby. Notice that the body of the expression will only be evaluated if clause yields a falsey value - exactly the behavior we want for a macro.

While more approachable than the other solutions presented, it's still a bit inconvenient to manipulate (but not much).

What is a high-level language, really?

As you can see, Lisp's metaprogramming facility blurs the line between a high and a low-level language. Given that we can manipulate our programs in every level, from a very high-level language such as Prolog (or miniKanren) to bit-fiddling and assembly-generating code, are Lisps high or low level languages? When the programmer is also language designer, does it really matter?